Cameras can achieve accurate 3D perception that can range out to a kilometer.

Broad deployment of safe and effective automated driving would be a game-changer: it can reduce road fatalities, deliver goods more efficiently, and enable new business opportunities. Its most critical enabler is robust, dense depth perception in all conditions.

From a safety perspective, the problem is urgent. The US hit a 16-year high in traffic deaths in 2022, and pedestrian fatalities reached their highest level in 40 years, with nighttime pedestrian deaths rising by 41% since 2014. Worldwide, road accidents remain a leading cause of death (an estimated 1.3M) and injury (an estimated 20-50M).

Automation also has economic benefits, such as helping to address the worldwide truck driver shortage. Today there are an estimated 2.6M unfilled driver positions across 25 surveyed countries, with shortages of 80,000 in the US, nearly 400,000 across Europe, and 1.8M in China. The shortage is expected to grow significantly due to a lack of skilled workers, an aging driver population, and waning interest in the profession because of quality-of-life issues.

The perception stack is the most crucial element of an AV or ADAS system for improving vehicle safety. It ingests sensor data and interprets the vehicle's surroundings through an ensemble of sophisticated algorithms that must provide robust object detection and accurate distance estimation in all lighting and weather conditions (including rain, fog, and snow), on both city and highway routes. Its range must be sufficient to give the system or driver time to respond to an issue. At highway speeds, this means perceiving out to 300m for passenger vehicles and over 500m for Class 8 trucks so that they can safely brake or maneuver to avoid an accident. The stack then delivers the information the vehicle needs to warn the driver or make good control decisions itself.
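To illustrate why the required range grows with speed and vehicle mass, here is a minimal sketch of a textbook stopping-distance estimate (distance covered during the reaction time plus distance covered while braking at constant deceleration). The function name and every input value are illustrative assumptions, not figures from Algolux or any standard. The sketch does not derive the 300m and 500m targets quoted above, which presumably include additional margin.

```python
def stopping_distance_m(speed_kmh, reaction_time_s, decel_mps2):
    """Reaction distance plus constant-deceleration braking distance, in metres.

    Hypothetical helper for illustration; all inputs are assumed values.
    """
    v = speed_kmh / 3.6                     # convert km/h to m/s
    reaction = v * reaction_time_s          # distance covered before braking starts
    braking = v ** 2 / (2.0 * decel_mps2)   # v^2 / (2a) for constant deceleration a
    return reaction + braking


# Assumed values only: a passenger car braking hard on a dry road versus
# a loaded truck with much weaker achievable deceleration.
car = stopping_distance_m(speed_kmh=120, reaction_time_s=1.5, decel_mps2=7.0)
truck = stopping_distance_m(speed_kmh=100, reaction_time_s=1.5, decel_mps2=3.0)

print(f"car:   {car:.0f} m")
print(f"truck: {truck:.0f} m")
```

Even at a lower speed, the assumed truck needs more distance than the car because braking distance scales inversely with deceleration. Lower deceleration on wet or icy roads, or the need to plan a maneuver rather than only brake, would push the required perception range out further.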

These perception systems use cameras, radar, and, increasingly, lidar sensors, each with its own pros and cons. Cameras are the dominant sensor and provide rich, high-resolution information at low cost, but they require lengthy manual ISP tuning and struggle in low light. Radars are mature sensors that provide active distance and velocity measurement, but they have low resolution, a limited field of view, and a range of about 200 to 300m. Lidars are also active distance sensors, robust to low light and higher in resolution than radar, but they are the most expensive sensors, are impacted by fog, rain, and snow, and have a practical range of about 200m.

While there won’t be one “magic” sensor, what if cameras could achieve robust, accurate 3D perception out to a kilometer, with dense depth estimation, real-time adaptive online calibration for multi-camera configurations, and lower system development and deployment costs than other, much more expensive sensors?

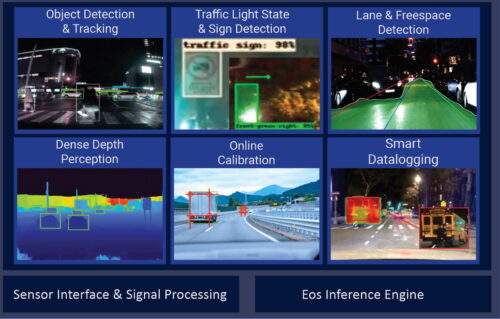

Algolux is a recognized pioneer in the field of robust perception software for ADAS and AVs and applies deep learning artificial intelligence (AI), computer vision, and computational imaging to deliver dense and accurate multi-camera depth and perception. It has validated and deployed solutions for both car and truck configurations with leading OEMs and Tier 1 customers.

The recently announced Eos Robust Depth Perception Software from Algolux has been recognized as addressing the range, resolution, cost, and robustness limitations of the latest lidar, radar, and camera-based systems, and as pairing this with a scalable, modular perception software suite.