Algolux Atlas maximizes image quality and computer vision results.

We are surrounded by cameras and displays, from those in our pockets to security cameras to our cars, and we’ve enjoyed the benefit of amazing improvements in image quality with each new generation of these systems. Those benefits come from ongoing innovations in camera sensors, display technologies, and the image signal processing (ISP) pipelines that take the RAW sensor data and convert it to something pleasing to view.

ISP parameters are traditionally hand-tuned by expert imaging teams over many months to produce visually good images, and this work is repeated for every new lens and sensor configuration. Of course, these subjectively good results are judged by the imaging team, the product owner, and, ultimately, everyone who views the resulting images or video.

But what about computer vision (CV), such as the systems that can prevent a car crash, identify people, or let your phone put a mustache filter on your face? These CV models consume the same ISP output, yet hand-tuning the ISP for human viewers can actually reduce the accuracy of the vision models.

ISPs are designed around our understanding of the human eye and the limitations of display devices, and in the process they discard information from the rich RAW sensor data. CV models do not share the human eye's limitations; dynamic range is one good example. Depending on its architecture, training, and task (e.g., object detection, segmentation, or depth sensing), each CV model performs best with certain image quality characteristics. The only way to deliver those characteristics is to optimize the ISP parameters objectively, maximizing the vision model's accuracy metrics (KPIs), rather than manually tuning for the “best” subjective image quality.

This multi-objective ISP hyperparameter optimization problem has been very difficult to solve. The ISP parameter space is massive and highly non-convex, with a great many local minima, a landscape that stochastic and other solvers struggle to navigate. Algolux has cracked this problem with a cloud-based tool and workflow called the Atlas Camera Optimization Suite.
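To make the local-minima problem concrete, the toy below uses the one-dimensional Rastrigin function, a standard optimization benchmark, as a stand-in for a rugged tuning objective. This is an assumption for illustration only: a real ISP KPI surface has far more dimensions and no closed form. The sketch counts local minima on a grid and shows a simple greedy local search getting trapped far from the global minimum.

```python
import math
from typing import Callable, List, Sequence, Tuple

Objective = Callable[[Sequence[float]], float]


def rastrigin(x: Sequence[float]) -> float:
    """Rastrigin benchmark (toy stand-in, not an ISP KPI): global minimum 0 at the origin,
    local minima near integer points."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def count_local_minima_1d(f: Objective, lo: float = -5.0, hi: float = 5.0, steps: int = 1000) -> int:
    """Count interior grid points whose value is lower than both neighbours."""
    xs = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    ys = [f([x]) for x in xs]
    return sum(1 for i in range(1, steps) if ys[i] < ys[i - 1] and ys[i] < ys[i + 1])


def local_descent(f: Objective, x0: Sequence[float], step: float = 0.05,
                  max_iters: int = 1000) -> Tuple[List[float], float]:
    """Greedy coordinate search: accept any +/- step move that lowers f; stop when none does."""
    x = list(x0)
    best = f(x)
    for _ in range(max_iters):
        improved = False
        for i in range(len(x)):
            for delta in (-step, step):
                cand = x[:]
                cand[i] += delta
                val = f(cand)
                if val < best:
                    x, best = cand, val
                    improved = True
        if not improved:
            break  # no neighbouring move helps: we are in a local minimum
    return x, best


if __name__ == "__main__":
    print("local minima on [-5, 5]:", count_local_minima_1d(rastrigin))
    x, val = local_descent(rastrigin, [3.2])
    print(f"greedy search from 3.2 stops at x={x[0]:.2f}, f={val:.2f}; global minimum is f=0 at x=0")
```

Starting from 3.2, the greedy search settles in the basin near x = 3 and never reaches the origin; escaping such traps requires restarts, a global search strategy, or problem-specific knowledge.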

Atlas enables camera imaging and perception design teams to automatically optimize sophisticated ISPs for maximum vision accuracy in a matter of days. Teams can also use it to significantly reduce the time and effort of tuning camera systems for visual image quality, leaving them free to focus on the final subjective fine-tuning.

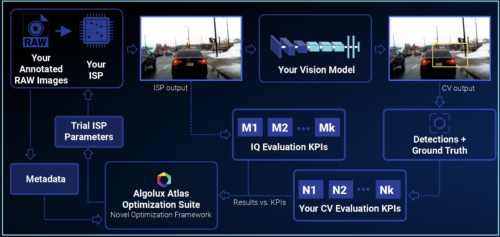

The process starts with your team capturing and annotating a small dataset of RAW images from the lens/sensor module used in the vision system, representative of the target application (e.g., object classes and illumination conditions). Typically, a couple of hundred images suffice for the optimization dataset and a few thousand for the validation dataset. If visual image quality is being optimized (alone or together with CV), RAW lab chart captures are required.

Atlas first pushes the optimization dataset through the ISP vendor’s bit-accurate model integrated with Atlas. Atlas directs the processed images to your target pre-trained computer vision model(s), and results are evaluated against the CV’s KPIs. Atlas then determines a new parameter set and repeats the process, intelligently searching the rugged ISP parameter space to find an optimal set that maximizes the KPIs.
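The closed loop described above can be sketched as a black-box search. Atlas's actual solver is not described here, so this sketch swaps in plain random search. `toy_isp`, `toy_kpi`, `SEARCH_SPACE`, and `make_dataset` are hypothetical stand-ins for the vendor's bit-accurate ISP model, a CV KPI such as detection accuracy, the ISP parameter ranges, and the RAW datasets. The search starts from a hand-tuned parameter set, so its result can never score worse than that baseline on the optimization set, and the winning parameters are then checked on a separate validation set.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

Params = Dict[str, float]
Image = List[float]

# Hypothetical ISP parameter ranges: name -> (low, high).
SEARCH_SPACE: Dict[str, Tuple[float, float]] = {"gain": (0.5, 4.0), "gamma": (0.3, 3.0)}


def make_dataset(n: int, seed: int, pixels: int = 64) -> List[Image]:
    """Synthetic, under-exposed 'RAW' frames with values in [0, 0.3) (hypothetical data)."""
    rng = random.Random(seed)
    return [[rng.random() * 0.3 for _ in range(pixels)] for _ in range(n)]


def toy_isp(raw: Sequence[float], p: Params) -> Image:
    """Hypothetical stand-in for a bit-accurate ISP model: digital gain, clip, gamma."""
    out = []
    for v in raw:
        v = min(1.0, max(0.0, v * p["gain"]))  # apply gain and clip to [0, 1]
        out.append(v ** (1.0 / p["gamma"]))    # gamma encoding
    return out


def toy_kpi(images: Sequence[Image]) -> float:
    """Hypothetical stand-in for a CV KPI: fraction of pixels in a 'usable' mid-tone range."""
    pixels = [v for img in images for v in img]
    return sum(1 for v in pixels if 0.2 <= v <= 0.8) / len(pixels)


def optimize(
    opt_set: Sequence[Sequence[float]],
    isp: Callable[[Sequence[float], Params], Image],
    kpi: Callable[[Sequence[Image]], float],
    space: Dict[str, Tuple[float, float]],
    initial: Params,
    iters: int = 200,
    seed: int = 0,
) -> Tuple[Params, float]:
    """Random search: process the set with each candidate, score it, keep the best."""
    rng = random.Random(seed)

    def evaluate(p: Params) -> float:
        return kpi([isp(raw, p) for raw in opt_set])

    best_p, best_score = dict(initial), evaluate(initial)  # start from the hand-tuned set
    for _ in range(iters):
        cand = {k: rng.uniform(lo, hi) for k, (lo, hi) in space.items()}
        score = evaluate(cand)
        if score > best_score:
            best_p, best_score = cand, score
    return best_p, best_score


if __name__ == "__main__":
    hand_tuned = {"gain": 1.0, "gamma": 1.0}
    opt_set, val_set = make_dataset(20, seed=1), make_dataset(200, seed=2)
    params, score = optimize(opt_set, toy_isp, toy_kpi, SEARCH_SPACE, hand_tuned)
    val_score = toy_kpi([toy_isp(raw, params) for raw in val_set])
    print(f"params={params} opt KPI={score:.3f} validation KPI={val_score:.3f}")
```

Random search is used here only because it is short and easy to verify; for a parameter space as large and rugged as a real ISP's, it would likely be far less sample-efficient than a purpose-built solver.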

This process can be applied to any vision system and has been proven in many customer engagements, delivering significant improvements in computer vision performance over hand-tuned ISPs. Learn more from this case study and by connecting with Algolux.