In 1964, Texas Instruments introduced the SN5400 TTL logic family and so started the six-decade dominance of digital logic in compute applications. From early ALUs to today’s multithreaded CPUs, digital logic is pervasive. Digital logic is relatively cheap, scaled according to Moore’s Law until recently, is easy to design with, and has been an efficient solution for most applications. Artificial intelligence (AI) has changed the game, especially at the Edge.

Edge AI enables exciting new use-cases in many market segments like smart mobility, augmented reality/virtual reality (AR/VR), wearables, and health technology. Many of these applications are portable, battery-based, consumer-oriented products, which places severe requirements on energy efficiency, heat dissipation, physical size, and cost.

The reemerging field of analog compute

Highly sequential Von Neumann digital processors rely on Moore’s Law to deliver continued improvements, but they present a serious power problem for Edge applications. Always-on voice, audio, and image processing are compute- and power-intensive for digital processors. The compelling alternative to digital for Edge AI is analog compute, which draws inspiration from the evolution of brain power.

Work smarter, not harder

Brains are the ultimate analog computers. The most impressive model is the human brain, which has 100 billion neurons and 1 quadrillion (10¹⁵) synapses, and runs on only ~12 watts of power. By comparison, ChatGPT3 implementations, using GPUs and memory, have been estimated to consume ~260 MWh/day. That is ~3 kWh of energy every second, or an average draw of roughly 10.8 MW, which is on the order of 900,000 times the power the human brain uses, just to perform language processing.

To mimic the brain and close the efficiency gap, we need to move from classical digital compute to an ultra-low-power approach, such as all-analog processing. All-analog bio-chemical compute is still in the domain of research, but electronics-based analog compute is real, and the results being achieved are impressive. Blumind, a leader in analog compute for Edge AI, achieves <1µW power for always-on keyword detection and about twice that for visual trigger detection, versus hundreds of µW to mW for the rest of the industry.

Less is more

In digital systems, power consumption has two aspects: static power associated with leakage and dynamic power associated with the capacitive charging/discharging of internal nodes. Every time a digital circuit switches, only one bit of information is processed using the entire power supply domain, leading to huge power inefficiency.

Power for digital rail-to-rail transitions is ½CV²F (where C = capacitance, V = voltage, F = frequency). Digital systems are power intensive because, in addition to bit-wise processing energy inefficiency, they are clocked at high frequency to achieve results in an acceptable time. By comparison, analog compute is performed at lower voltages and processes tens or hundreds of bits of information per transition, thereby leading to orders of magnitude improved power efficiency. In addition, analog compute is inherently highly parallel, resulting in low latency for real-time classification.
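To make the ½CV²F relation concrete, here is a minimal Python sketch. The capacitance, voltage, and frequency values are hypothetical and chosen only for illustration; they are not measurements of any real chip.

```python
def dynamic_power_watts(capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Dynamic switching power, P = 1/2 * C * V^2 * F, as given in the text."""
    return 0.5 * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical operating points (assumptions, not figures from the article).
C_SWITCHED = 1e-9  # 1 nF of total switched capacitance

baseline = dynamic_power_watts(C_SWITCHED, voltage_v=1.0, frequency_hz=100e6)
half_voltage = dynamic_power_watts(C_SWITCHED, voltage_v=0.5, frequency_hz=100e6)
slow_clock = dynamic_power_watts(C_SWITCHED, voltage_v=1.0, frequency_hz=1e6)

print(f"baseline:     {baseline * 1e3:.2f} mW")
print(f"half voltage: {half_voltage * 1e3:.2f} mW")
print(f"1% clock:     {slow_clock * 1e3:.2f} mW")
```

Because voltage enters as a square, halving the swing cuts dynamic power to a quarter, while lowering the switching rate reduces it only linearly. That is why operating at lower voltages and avoiding high-speed clocks both matter.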

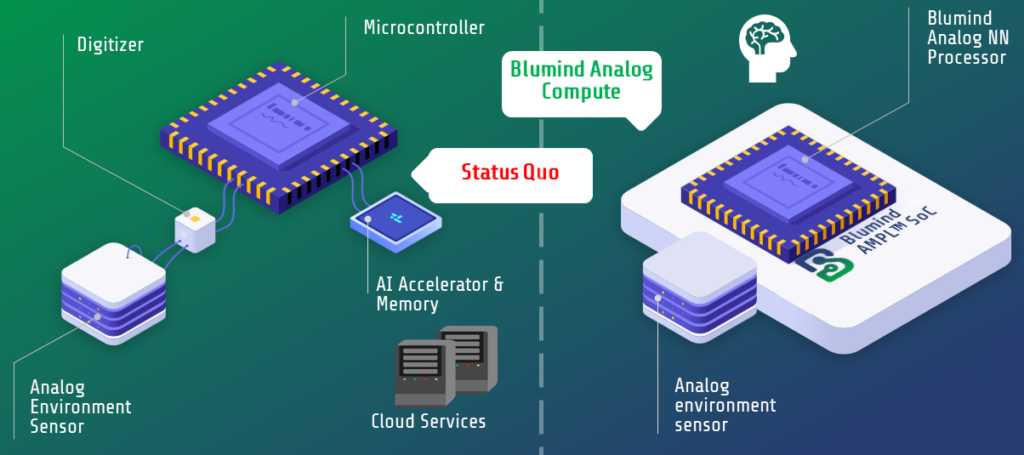

All analog

True all-analog solutions avoid analog-to-digital converters (ADCs) and digital-to-analog converters (DACs) in all areas of the architecture, including system input and output interfaces (where possible), thus eliminating unnecessary power overhead and digital processing. Using analog input sensors gives greater data precision, which is particularly useful for time series data like audio processing. By avoiding digital quantization of the analog inputs, the analog neural network produces more accurate classification results for audio/voice. Further, the efficiency of analog compute reduces thermal concerns, chip size, and cost. Analog compute is low power, fast, small, and cheap; so why is it not more pervasive today?
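The quantization point can be illustrated with a toy model. The sketch below assumes an ideal uniform ADC (a hypothetical `quantize` helper, not any vendor's converter) and measures the RMS error it adds to a sine wave at several bit depths. It ignores analog noise, which limits precision in any real analog front end.

```python
import math


def quantize(x: float, bits: int, full_scale: float = 1.0) -> float:
    """Round x to the nearest level of an ideal uniform ADC spanning +/- full_scale."""
    step = 2.0 * full_scale / (2 ** bits)
    clipped = max(-full_scale, min(full_scale - step, x))
    return round(clipped / step) * step


def rms_quantization_error(bits: int, samples: int = 10_000) -> float:
    """RMS difference between a test sine wave and its quantized version."""
    total = 0.0
    for n in range(samples):
        x = 0.9 * math.sin(2.0 * math.pi * 7.0 * n / samples)
        total += (x - quantize(x, bits)) ** 2
    return math.sqrt(total / samples)


for bits in (4, 8, 12):
    print(f"{bits:2d}-bit ADC: RMS error = {rms_quantization_error(bits):.6f}")
```

In this idealized model the error shrinks as bits are added but never reaches zero; a path that keeps the signal analog end to end does not incur this particular error term.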

The challenges to analog compute are many. Reaping the benefits of analog compute whilst avoiding the pitfalls is an engineering challenge.

Compute in memory

A common analog compute approach centers on the use of specialized (and costly) memory to store and multiply the weights for the neural network. NOR Flash, RRAM, MRAM, and other specialty memories are being pursued, but challenges with reliable programming and long-term drift are common. Beyond programming and drift, specialty memories have two additional fundamental limitations:

1. They require expensive specialty processes that add significant cost (a typical NOR flash process adds 9 to 18 masking layers to a 28nm or 40nm process, which equates to ~15-30% additional cost). The complexity also increases cycle time, lowers yield, and hinders time to market and foundry portability.

2. Development and qualification of specialty memory is expensive, complex, and slow for foundries, resulting in limited specialized memory product roadmaps on advanced process nodes. A one-and-done technology node is a limitation for many specialty memory analog compute solutions.

Digital compute inherently limits efficiency

Variation challenges

In analog compute, it’s also common to deploy voltage- and/or current-steering architectures. Process, voltage, and temperature (PVT) variation, as well as drift over time, present challenging calibration and repeatability problems for most analog compute solutions. Additionally, ADCs and DACs with their non-linearities are used in large numbers in voltage- and current-steering solutions, resulting in a hybrid analog and digital architecture, which is possibly the worst of both worlds.
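A toy Monte Carlo shows why variation is a concern for analog multiply-accumulate. The sketch below assumes each stored weight carries an independent multiplicative Gaussian error of relative size `sigma`; this is a simplified stand-in for PVT variation and drift, not a model of any specific architecture or of Blumind's mitigation. All names and values are hypothetical.

```python
import random


def noisy_dot(weights, inputs, sigma, rng):
    """Dot product in which every weight is scaled by (1 + Gaussian error)."""
    return sum(w * (1.0 + rng.gauss(0.0, sigma)) * x for w, x in zip(weights, inputs))


def rms_relative_error(sigma: float, trials: int = 500, n: int = 64, seed: int = 0) -> float:
    """RMS relative error of the noisy dot product versus the ideal one."""
    rng = random.Random(seed)  # fixed seed keeps the experiment repeatable
    weights = [rng.uniform(0.1, 1.0) for _ in range(n)]
    inputs = [rng.uniform(0.1, 1.0) for _ in range(n)]
    ideal = sum(w * x for w, x in zip(weights, inputs))
    squared = 0.0
    for _ in range(trials):
        squared += ((noisy_dot(weights, inputs, sigma, rng) - ideal) / ideal) ** 2
    return (squared / trials) ** 0.5


for sigma in (0.0, 0.01, 0.05):
    print(f"weight mismatch sigma={sigma:.2f}: RMS output error = {rms_relative_error(sigma):.5f}")
```

In this simplified model the output error grows in proportion to the per-weight mismatch, which is why uncorrected variation translates directly into calibration and repeatability problems.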

Blumind’s unique solution

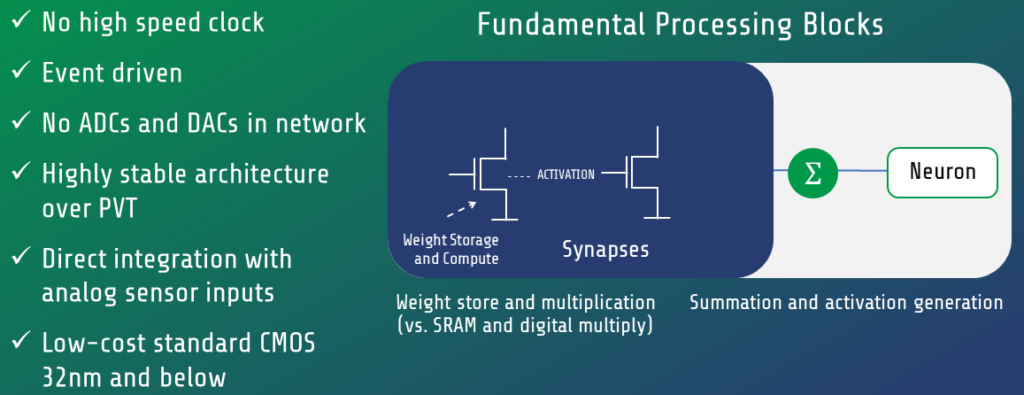

At Blumind, great care was taken in developing our AMPL analog compute architecture to address the aforementioned limitations. Key architectural considerations include:

• Exploiting device physics on standard advanced CMOS processes with an established road map to advanced process nodes for all aspects of the design, including efficient multibit weight storage.

• Taking an all-analog approach to processing, including sub-threshold design and analog input sensor data (where possible), and removing the need for on-chip ADCs and DACs.

• Removing high-speed on-chip clocks and using deterministic event-driven processing.

• Mitigating analog PVT and drift issues with a proprietary architecture and thus avoiding the pitfalls induced by precision voltage, current, and device matching.

• Using a standard software stack, such as PyTorch or TensorFlow, and eliminating complex compilers.

These novel architectural techniques, implemented on low-cost standard CMOS trailing nodes, have been proven in our recent silicon results with ultra-low latency and low power, unmatched in Edge voice/audio and vision applications.

Blumind’s all analog AMPL compute core

System solution advantages

For always-on keyword detection (KWD), the name of the game is battery life, and that comes down to the total system power for the always-on solution, including the microphone and the intelligent processor.

Blumind’s audio/voice solution can work with a MEMS analog or a digital microphone. Both microphones are available in standard packages from many vendors, so commodity sourcing is available for both options. However, using an analog microphone is typically 25% to 50% cheaper than similar digital microphone solutions and the power advantage is significant. Analog microphones use a lower supply voltage (typically 0.9V +/- 10%) and consume <20µW of active power vs. digital microphones that consume ~200µW, which is an order of magnitude higher.

Total system-level power consumption for Blumind’s always-on KWD solution is <30µW, including the analog microphone and advanced features like a 2-second integrated audio buffer. In always-on KWD applications, the 2-second audio buffer is required so that subsequent natural language processing engines can validate the keyword and follow-on commands.

Compared to other implementations, which typically do not include the required buffer, the Blumind solution demonstrates a 20X total power advantage, meaning battery life can be extended from about a day to roughly three weeks.
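The battery-life claim follows from simple arithmetic, sketched below in Python. The <30µW system power and the 20X advantage come from the text; the ~600µW comparison figure is merely implied by those two numbers, and the battery capacity is a hypothetical value sized so that the comparison system lasts exactly one day. The model is idealized and ignores self-discharge and any other loads on the battery.

```python
def battery_life_days(capacity_mwh: float, avg_power_uw: float) -> float:
    """Idealized battery life: stored energy divided by constant average power."""
    hours = capacity_mwh * 1000.0 / avg_power_uw  # mWh -> uWh, then uWh / uW = hours
    return hours / 24.0


BLUMIND_SYSTEM_UW = 30.0  # upper bound quoted in the text (<30 uW)
POWER_ADVANTAGE = 20.0    # 20X figure quoted in the text

# Implied power of the comparison system (derived, not quoted): ~600 uW.
other_system_uw = BLUMIND_SYSTEM_UW * POWER_ADVANTAGE

# Hypothetical battery budget sized so the comparison system lasts one day.
capacity_mwh = other_system_uw * 24.0 / 1000.0

print(f"comparison system: {battery_life_days(capacity_mwh, other_system_uw):.1f} days")
print(f"always-on at 30uW: {battery_life_days(capacity_mwh, BLUMIND_SYSTEM_UW):.1f} days")
```

Under these assumptions battery life scales inversely with average power, so a 20X reduction stretches one day to about 20 days. Any additional gain would have to come from operating below the 30µW upper bound.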