by Kavita Char, Principal Product Marketing Manager, Renesas Electronics.

Power your edge AI applications with new RA8 series MCUs from Renesas. The combination of AI and the IoT, also known as the Artificial Intelligence of Things (AIoT), enables the creation of “intelligent” devices that learn from data and make decisions without human intervention.

There are several drivers of this trend to build intelligence on edge devices:

• Decision-making on the edge reduces latency and costs associated with cloud connectivity and makes real-time operation possible.

• Lack of bandwidth to the cloud drives compute and decision making onto edge devices.

• Security is a key consideration; requirements for data privacy and confidentiality drive the need to process and store data on the device itself.

AI at the edge, therefore, provides advantages of autonomy, lower latency, lower power, lower bandwidth requirements, lower costs, and higher security, all of which make it more attractive for emerging applications and use cases.

The AIoT has opened new markets for MCUs, enabling an increasing number of new applications and use cases that can employ MCUs paired with some form of AI acceleration to facilitate intelligent control on edge and endpoint devices. These AI-enabled MCUs provide a unique blend of DSP capability for compute and machine learning (ML) for inference and are being used in applications as diverse as keyword spotting, sensor fusion, and vibration analysis. Higher performance MCUs enable more complex applications in vision and imaging such as face recognition, fingerprint analysis, and object detection.

New high-performance AI MCUs

The new RA8 series MCUs from Renesas feature the Arm Cortex-M85 core, which is based on the Armv8.1-M architecture and has a 7-stage superscalar pipeline, providing the additional acceleration needed for compute-intensive neural network (NN) and digital signal processing (DSP) tasks.

The Cortex-M85 is the highest performance Cortex-M core and comes equipped with Helium, the Arm M-Profile Vector Extension (MVE) introduced with the Armv8.1-M architecture. Helium is a Single Instruction Multiple Data (SIMD) vector processing instruction set extension that can provide performance uplift by processing multiple data elements with a single instruction, for example repeated multiply-accumulate operations over an array of data. Helium significantly accelerates DSP and ML capabilities in resource-constrained MCU devices, and it enables an unprecedented 4x acceleration in ML tasks and 3x acceleration in DSP tasks compared to the older Cortex-M7 core. Combined with large memory, advanced security, and a rich set of peripherals and external interfaces, RA8 MCUs are ideally suited for voice and vision AI applications, as well as compute-intensive applications requiring signal processing support such as audio processing, JPEG decoding, and motor control.

What Helium enables

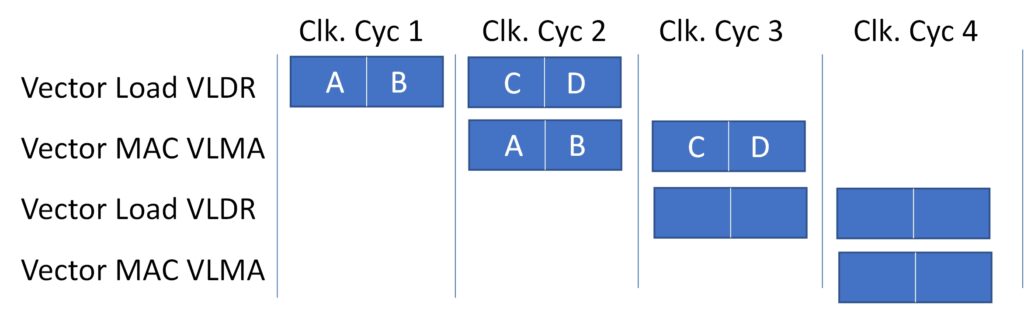

The Helium performance boost comes from wide 128-bit vector registers that hold multiple data elements, all of which are processed by a single instruction (SIMD). Multiple instructions may overlap in the pipeline execution stage. The Cortex-M85 is a dual-beat CPU core and can process two 32-bit data words in one clock cycle. A multiply-accumulate (MAC) operation requires a load from memory to a vector register followed by a multiply accumulate, which can happen at the same time as the next data is being loaded from memory. The overlapping of the loads and multiplies enables the CPU to have double the performance of an equivalent scalar processor without the area and power penalties.
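The lane and beat arithmetic described above can be sketched in a few lines of Python. This is only an illustrative model, not Helium code: the function names are hypothetical, and it assumes that one "beat" covers 32 bits of the 128-bit vector, which matches the two 32-bit words per clock cycle quoted for the dual-beat core.

```python
VECTOR_BITS = 128     # Helium vector register width (from the text)
BEAT_BITS = 32        # assumption: one beat handles 32 bits of the vector
BEATS_PER_CYCLE = 2   # dual-beat core (from the text)


def lanes(element_bits):
    """Number of elements that fit in one 128-bit vector register."""
    return VECTOR_BITS // element_bits


def cycles_per_vector_instruction():
    """Cycles a dual-beat core needs to step through one full vector."""
    return (VECTOR_BITS // BEAT_BITS) // BEATS_PER_CYCLE


def vector_dot(a, b, element_bits=8):
    """Dot product accumulated one vector-sized chunk at a time.

    Returns (result, chunks), where chunks is the number of
    vector-wide multiply-accumulate steps the model performed.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    step = lanes(element_bits)
    acc = 0
    chunks = 0
    for i in range(0, len(a), step):
        # One modeled vector MAC: multiply each lane pair, add to accumulator.
        acc += sum(x * y for x, y in zip(a[i:i + step], b[i:i + step]))
        chunks += 1
    return acc, chunks
```

With 8-bit elements, `lanes(8)` gives 16 elements per register, so a 32-element dot product takes two vector MAC steps in this model instead of 32 scalar ones.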

Helium also introduces 150 new scalar and vector instructions for acceleration of DSP and ML. These features make a Helium-enabled MCU particularly suited for AI/ML and DSP-style tasks without any additional DSP or hardware AI accelerator in the system while also lowering costs and power consumption.

Vision AI and graphics apps

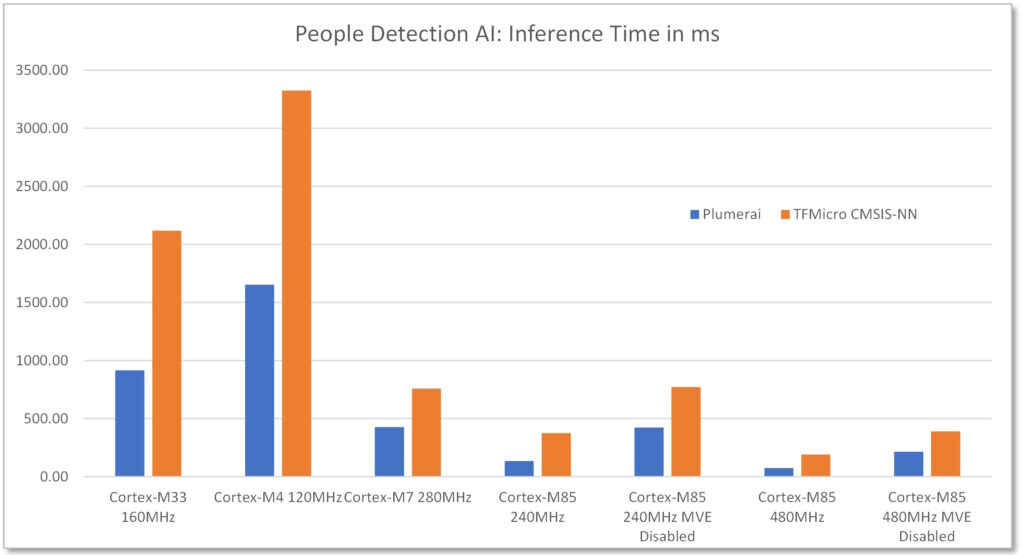

Renesas has successfully demonstrated the performance uplift with Helium in a variety of AI/ML use cases, showing significant improvement over a Cortex-M7 MCU—more than 3.6x in some cases.

One such application is a People Detection AI application developed in collaboration with Plumerai, a leading provider of vision AI solutions. Based on the RA8D1 MCU, the camera-based People Detection AI solution has been ported and optimized for the Helium-enabled Arm Cortex-M85 core, successfully demonstrating both the performance of the Cortex-M85 core and Helium as well as the graphics capabilities of RA8D1 devices.

Accelerated with Helium, the application achieves a 3.6x performance uplift over a Cortex-M7 core and a frame rate of 13.6 fps, which is strong performance for an MCU without hardware acceleration. The demo platform captures live images from an OV7740 image-sensor-based camera at 640×480 resolution and presents detection results on an attached 800×480 LCD display. The people detection software detects and tracks each person within the camera frame, even if partially occluded, and shows bounding boxes drawn around each detected person overlaid on the live camera display.
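To put the 13.6 fps figure in perspective, the time and cycle budget per frame can be worked out directly. The sketch below is plain arithmetic with hypothetical helper names; it assumes the 480MHz clock listed for the RA8D1 later in this article and says nothing about how that budget is split between inference and graphics.

```python
def frame_period_ms(fps):
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def cycles_per_frame(clock_hz, fps):
    """CPU clock cycles that elapse during one frame period."""
    return clock_hz / fps


period = frame_period_ms(13.6)          # about 73.5 ms per frame
budget = cycles_per_frame(480e6, 13.6)  # about 35.3 million cycles per frame
```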

The Plumerai People Detection software uses a convolutional neural network (CNN) with multiple layers, trained with over 32 million labeled images. The layers that account for the majority of the total latency are Helium accelerated, such as the Conv2D and fully connected layers, as well as the depthwise convolution and transpose convolution layers.

The camera module provides images in YUV422 format, which is converted to RGB565 format for display on the LCD screen. The integrated 2D graphics engine on the RA8D1 then resizes and converts the RGB565 to ABGR8888 at 256×192 resolution for input to the NN. The people detection software, executing on the Cortex-M85 core, then converts the ABGR8888 format to the NN model input format and runs the people detection inference function. Drawing functions using the 2D drawing engine on the RA8D1 render the camera input to the LCD screen, draw bounding boxes around detected people, and present the frame rate. The people detection software uses roughly 1.2MB of flash and 320KB of SRAM, including the memory for the 256×192 ABGR8888 input image.
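The RGB565 to ABGR8888 step and the size of the NN input buffer can be illustrated with a short Python sketch. On the RA8D1 this conversion is done by the 2D graphics engine, so the code below is only a software model of the idea. The function names are hypothetical, and the bit layout is an assumption: red in the top five bits of the RGB565 word, and the 32-bit result packed as alpha, blue, green, red from the most significant byte down.

```python
def rgb565_to_abgr8888(pixel):
    """Expand one 16-bit RGB565 pixel to a 32-bit ABGR8888 word.

    Assumed layouts (not confirmed by the article):
      RGB565:   RRRRRGGG GGGBBBBB
      ABGR8888: alpha in bits 31..24, blue 23..16, green 15..8, red 7..0
    """
    r5 = (pixel >> 11) & 0x1F
    g6 = (pixel >> 5) & 0x3F
    b5 = pixel & 0x1F
    # Replicate the high bits into the low bits so full scale maps to 255.
    r8 = (r5 << 3) | (r5 >> 2)
    g8 = (g6 << 2) | (g6 >> 4)
    b8 = (b5 << 3) | (b5 >> 2)
    alpha = 0xFF  # assumption: fully opaque
    return (alpha << 24) | (b8 << 16) | (g8 << 8) | r8


def image_bytes(width, height, bytes_per_pixel):
    """Size of an uncompressed image buffer in bytes."""
    return width * height * bytes_per_pixel


nn_input_bytes = image_bytes(256, 192, 4)  # ABGR8888 uses 4 bytes per pixel
```

Under those assumptions, the 256×192 input image occupies 196,608 bytes (192KB if 1KB is taken as 1024 bytes), which is a large share of the roughly 320KB of SRAM quoted above.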

Benchmarking was performed to compare the latency of Plumerai’s People Detection solution with that of the same NN running on TensorFlow Lite for Microcontrollers (TFMicro) with Arm’s CMSIS-NN kernels. Additionally, for the Cortex-M85, the performance of both solutions with Helium (MVE) disabled was also benchmarked. This benchmark data shows pure inference performance and does not include latency for the graphics functions, such as image format conversions.

This application makes optimal use of all the resources available on the RA8D1:

• High-performance 480MHz processor.

• Helium for NN acceleration.

• Large flash and SRAM for storage of model weights and input activations.

• Camera interface for capture of input images/video.

• Display interface to show the detection results.

Renesas has also demonstrated multi-modal voice and vision AI solutions based on RA8D1 devices that integrate visual wake words and face detection and recognition with speaker identification. RA8D1 MCUs with Helium can significantly improve neural network performance without the need for any additional hardware acceleration, thus providing a low-cost, low-power option for implementing AI and ML use cases.