Welcome to a new world in which communication and computation are performed using light.

I love science fiction. One of my favorite scenes is the “Tears in rain” monologue by the character Roy Batty (portrayed by Rutger Hauer) in the 1982 Ridley Scott film “Blade Runner.” This classic scene starts with Roy saying: “I’ve seen things you people wouldn’t believe…” I know what he meant because I’ve recently been seeing things in the optoelectronics domain that even I don’t believe.

FMCW LiDAR

Most light detection and ranging (LiDAR) systems are big, bulky, power-hungry, and expensive. These systems use a time-of-flight (TOF) approach: they emit powerful pulses of light and measure the round-trip time of any reflections.
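The time-of-flight principle boils down to one line of arithmetic: the light covers the distance to the target twice, so the range is R = c·t/2, where t is the measured round-trip time. Here is a minimal sketch; the function names are illustrative, and the vacuum speed of light stands in for the (very slightly lower) speed in air.

```python
# Speed of light in vacuum (m/s); used here as an approximation for air.
C = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Target distance from a measured pulse round-trip time.

    The pulse travels out and back, so the one-way distance is half the path.
    """
    return C * round_trip_s / 2.0


def tof_round_trip_s(range_m: float) -> float:
    """Inverse relation: how long the echo from a target at range_m takes."""
    return 2.0 * range_m / C


if __name__ == "__main__":
    t = tof_round_trip_s(1000.0)
    print(f"1 km target -> round trip {t * 1e6:.3f} us")
    print(f"round trip {t:.3e} s -> {tof_range_m(t):.1f} m")
    # Range error caused by 1 ns of timing error
    print(f"1 ns timing error -> {tof_range_m(1e-9) * 100:.1f} cm")
```

An echo from a target 1 km away arrives after roughly 6.7 µs, and every nanosecond of timing error corresponds to about 15 cm of range error, which is why pulsed TOF systems need fast, precise timing electronics.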

By comparison, a relatively new incarnation called frequency-modulated continuous-wave (FMCW) LiDAR sends out a continuous laser beam at a much lower intensity than its pulsed TOF cousins. This form of LiDAR makes it possible to extract instantaneous depth, velocity, and polarization-dependent intensity on a per-pixel basis while remaining immune to environmental and multi-user interference. I was recently chatting with the folks at SiLC Technologies (www.silc.com), who have demonstrated the ability to perceive, identify, and avoid objects at ranges of more than 1 kilometer with their highly integrated Eyeonic FMCW LiDAR.
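How can a continuous beam yield both depth and velocity? One common textbook scheme, assumed here because SiLC's actual waveform and processing are not described, sweeps the laser frequency in a triangular up/down chirp and mixes the returning light with the outgoing light. The resulting beat frequency contains a range term, f_R = 2SR/c (where S is the chirp rate), plus or minus a Doppler term, f_D = 2v/λ. Averaging the up-chirp and down-chirp beats isolates range, and differencing them isolates radial velocity. The function names, the 1550 nm wavelength, the 1 GHz / 10 µs chirp, and the sign convention are hypothetical choices for illustration.

```python
C = 299_792_458.0  # speed of light (m/s)


def fmcw_range_velocity(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m):
    """Recover range and radial velocity from the beat frequencies measured
    on the up-chirp and down-chirp of a triangular FMCW waveform.

    Assumed sign convention: positive velocity means the target is approaching,
    which lowers the up-chirp beat and raises the down-chirp beat.
    """
    slope = bandwidth_hz / chirp_s                 # chirp rate S (Hz/s)
    f_range = (f_up_hz + f_down_hz) / 2.0          # range-induced beat component
    f_doppler = (f_down_hz - f_up_hz) / 2.0        # Doppler shift component
    range_m = C * f_range / (2.0 * slope)          # invert f_R = 2*S*R/c
    velocity_mps = wavelength_m * f_doppler / 2.0  # invert f_D = 2*v/lambda
    return range_m, velocity_mps


def simulate_beats(range_m, velocity_mps, bandwidth_hz, chirp_s, wavelength_m):
    """Forward model: beat frequencies an ideal point target would produce."""
    slope = bandwidth_hz / chirp_s
    f_range = slope * 2.0 * range_m / C
    f_doppler = 2.0 * velocity_mps / wavelength_m
    return f_range - f_doppler, f_range + f_doppler


if __name__ == "__main__":
    # Hypothetical parameters chosen for illustration only.
    B, T, LAM = 1e9, 10e-6, 1550e-9
    f_up, f_down = simulate_beats(200.0, 15.0, B, T, LAM)
    r, v = fmcw_range_velocity(f_up, f_down, B, T, LAM)
    print(f"beats: up {f_up / 1e6:.2f} MHz, down {f_down / 1e6:.2f} MHz")
    print(f"range {r:.2f} m, velocity {v:.2f} m/s")
```

With a single up-chirp, a range shift and a Doppler shift look identical in the beat frequency; the second, down-sloping chirp is what lets the two be separated.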

In-Package Optical Interconnect

Another company that has developed some very interesting optoelectronics technology is Ayar Labs (www.ayarlabs.com). For a modern data center to offer peak performance, data must be able to pass from chip to chip, shelf to shelf, and rack to rack at lightning speed. Unfortunately, the bandwidth requirements we expect to see in the very near future far exceed the capabilities of traditional copper-based electrical interconnect.

The solution is to use optical interconnect, but it is not sufficient to take existing host devices (CPUs, GPUs, etc.) and then add external optics. The highest levels of performance can be achieved only by implementing the optical input/output (I/O) in the form of chiplets that are incorporated inside the host device package.

This is what the folks at Ayar Labs have done. As a result, it’s now possible for CPUs, GPUs, memory, and storage to be located tens, hundreds, or even thousands of meters from each other.

Optical AI

I recently had the opportunity to talk to a company called CogniFiber (www.cognifiber.com), whose tagline is “Computing @ The Speed of Light.”

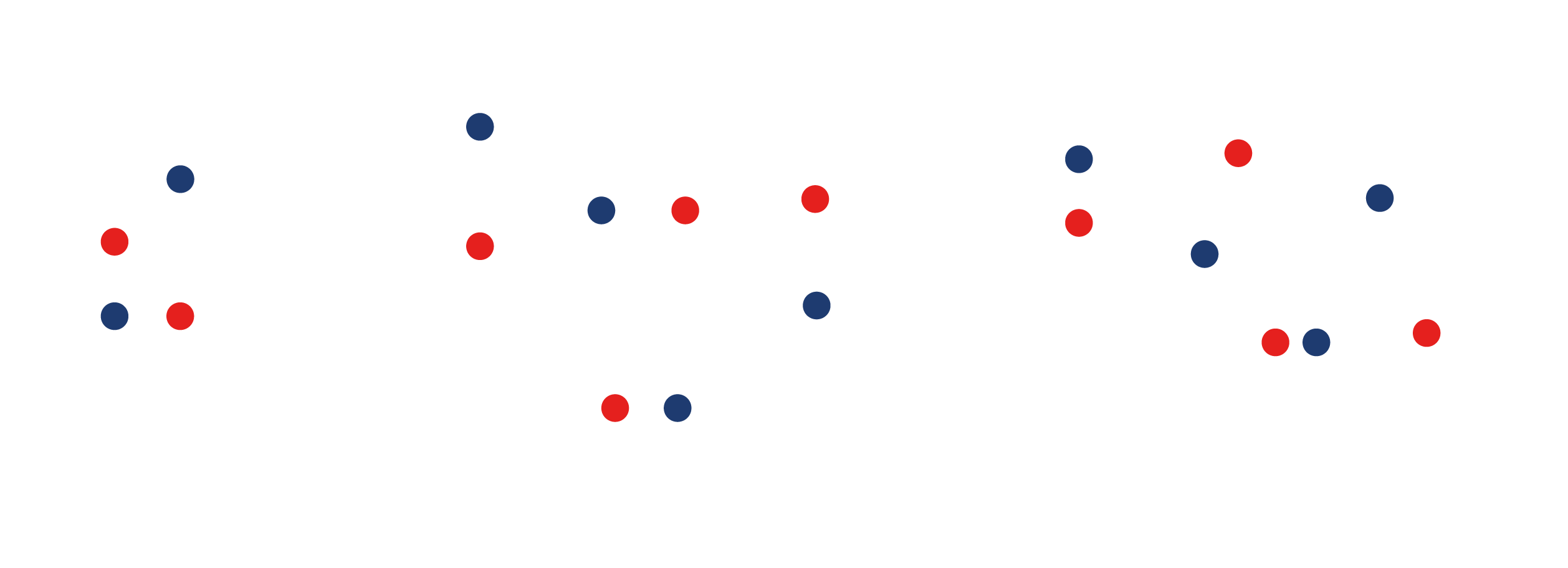

When most people think about the optical systems used in data communications, they picture optical cables containing multiple individual fibers. However, a newer approach for ultra-high-capacity applications employs multicore fiber (MCF), in which a single optical fiber contains multiple cores within a common cladding.

In traditional data communications applications, engineers strive to keep crosstalk between cores as low as possible. By comparison, the folks at CogniFiber endeavor to maximize the crosstalk between cores and use it to perform AI/ML-type computations.
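To see why crosstalk can act as computation, consider the simplest possible case from textbook coupled-mode theory: two identical, phase-matched cores. CogniFiber's actual design is not described here, so this is only a conceptual sketch under that assumption. Light leaks between the cores at a rate set by a coupling coefficient κ (which depends on core diameter and spacing), so after a length z the output field amplitudes are a fixed linear mix of the inputs, that is, a matrix-vector product whose "weights" are set by geometry and length. The coefficient κ = 50 rad/m and all function names are hypothetical. A real optical neural network would also need many more cores, a way to set the weights, and a nonlinearity.

```python
import math


def coupler_matrix(kappa_per_m: float, length_m: float):
    """2x2 transfer matrix for two identical, phase-matched coupled cores.

    Coupled-mode equations dA1/dz = i*k*A2 and dA2/dz = i*k*A1 have the
    solution below: power swaps back and forth between the cores as cos/sin.
    """
    phase = kappa_per_m * length_m
    c, s = math.cos(phase), 1j * math.sin(phase)
    return [[c, s], [s, c]]


def propagate(matrix, amplitudes):
    """Apply the fiber's transfer matrix to the input field amplitudes
    (a matrix-vector product, i.e. one fixed linear 'layer')."""
    return [sum(m * a for m, a in zip(row, amplitudes)) for row in matrix]


def detect(amplitudes):
    """Photodetectors measure optical power |A|^2 (square-law detection)."""
    return [abs(a) ** 2 for a in amplitudes]


if __name__ == "__main__":
    KAPPA = 50.0  # hypothetical coupling coefficient (rad/m)
    # Launch light into core 1 only and watch it transfer to core 2.
    for length_cm in (0.0, 1.0, 2.0, 3.14):
        out = detect(propagate(coupler_matrix(KAPPA, length_cm / 100), [1.0, 0.0]))
        print(f"{length_cm:5.2f} cm -> core powers {out[0]:.3f}, {out[1]:.3f}")
```

Because the transfer matrix acts on complex amplitudes, the relative phase of the inputs also steers where the light ends up: two equal-power inputs with a 90° phase difference can emerge entirely from one core, which is the kind of interference a fiber-based processor would exploit.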

What we are talking about here is a neural network implemented inside a single optical fiber composed of thousands of cores. Depending on the diameters and proximity of the cores, it’s possible to perform computations using fibers only a few centimeters long. According to CogniFiber, this in-fiber processing delivers a 100-fold boost in computational capability while consuming a fraction of the power of a traditional semiconductor-based solution. We truly do live in interesting times.