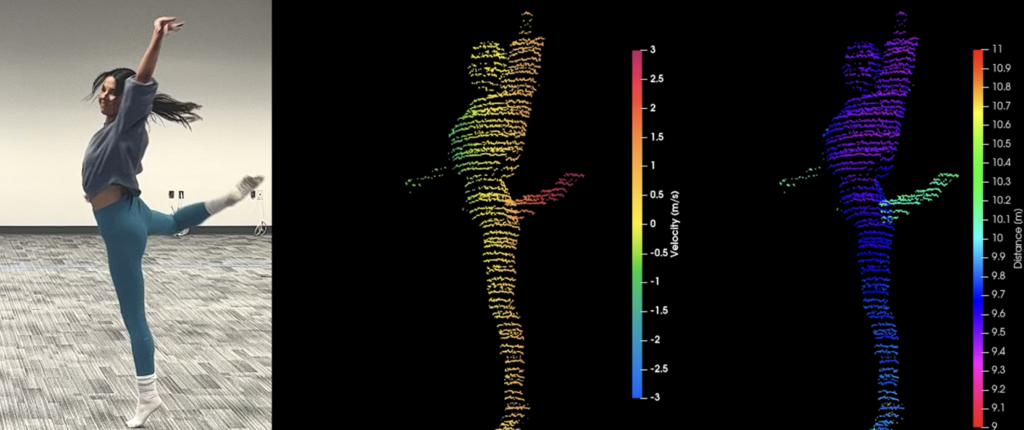

Direct imaging of movement and depth brings human-like vision to machines.

Human vision has evolved to focus on detecting movement. This has been critical to our survival in a hostile and dynamic environment, making the difference between catching food and being food. More than 90% of our eye’s resources work to recognize motion. These resources are hardwired to enable fast subconscious reactions, hand-eye coordination, and predictive behavior. The eye makes this possible by processing images and sending the results not only to the brain for visual perception but also, through subconscious pathways, to critical muscles throughout the body.

In contrast, today’s machine vision systems are built on cameras that were developed for recording images, not processing them. These cameras capture a series of still images, each of which contains only a fraction of the available information, and then rely on expensive, power-hungry computation to infer what was lost. For example, motion is analyzed by comparing pixels frame by frame. To get machines out of factories and integrate them into our society, we need to give them human-like vision. This requires image sensors with a high level of built-in intelligence, like the human eye: sensors that capture motion directly and provide accurate, real-time information that enables rapid reactions.
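To make the frame-by-frame comparison concrete, here is a minimal sketch of frame differencing, one common way camera pipelines detect motion. It is an illustration under stated assumptions, not any particular vendor’s method: frames are assumed to be small grayscale images stored as lists of rows, and the `motion_mask` and `moving_fraction` helpers and the threshold of 25 intensity levels are hypothetical choices.

```python
# Minimal frame-differencing sketch (illustrative only).
# Assumption: frames are grayscale images as lists of rows with values 0-255.

Frame = list[list[int]]


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> list[list[bool]]:
    """Mark pixels whose intensity changed by more than `threshold` between frames.

    `threshold` is a hypothetical tuning value that suppresses sensor noise.
    """
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def moving_fraction(mask: list[list[bool]]) -> float:
    """Fraction of pixels flagged as changed (0.0 for an empty mask)."""
    total = sum(len(row) for row in mask)
    changed = sum(sum(row) for row in mask)  # True counts as 1
    return changed / total if total else 0.0


if __name__ == "__main__":
    # A single bright pixel shifts one column to the right between frames.
    prev = [[0, 0, 0, 0], [0, 200, 0, 0], [0, 0, 0, 0]]
    curr = [[0, 0, 0, 0], [0, 0, 200, 0], [0, 0, 0, 0]]
    mask = motion_mask(prev, curr)
    print(mask[1])  # [False, True, True, False]
    print(moving_fraction(mask))
```

Note what the mask does not provide: it marks where intensity changed between two frames, but not how fast or in which direction anything moved. Recovering velocity requires further processing across several frames (for example, optical flow), which is where the computational cost described above accumulates.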

True machine autonomy requires human-like vision

Today’s industrial infrastructure relies on machines operating in human-constructed, highly controlled environments, where they deal with predetermined, well-orchestrated movements. Here, standard camera vision suffices, and any required depth sensing can be achieved through a number of well-known (typically geometrical) techniques. However, we are entering a Golden Age of AI in which machines and robots will become far more involved in our society and economic growth. To move machines out of the controlled environment of the factory and into everyday life, where complex and unpredictable movements abound, human-like vision with direct motion sensing is necessary.

Despite the critical role of motion in perception, the main approach to extracting movement information has so far relied on computationally expensive, slow, and inaccurate analysis of still images (typically taken from multiple angles). Very few, if any, companies focus on direct perception of motion, and until now no one had demonstrated a cost-effective, accurate way to measure velocity and movement directly. The reason is that capturing accurate motion information from moving objects is inherently complex.
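As an illustration of the multi-angle, geometrical approach, the sketch below assumes a rectified stereo camera pair and the standard pinhole triangulation relation Z = f·B/d (focal length f in pixels, baseline B, disparity d). The function names and example numbers are hypothetical. Velocity is obtained only indirectly, by differencing two depth estimates, and the first-order error term ΔZ ≈ Z²/(f·B)·Δd shows why this becomes inaccurate at range.

```python
# Indirect depth and velocity from a rectified stereo pair (illustrative sketch).
# Assumptions: pinhole cameras, rectified images, focal length in pixels.


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_err_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


def velocity_from_depths(z1_m: float, z2_m: float, dt_s: float) -> float:
    """Radial velocity estimated by differencing two depths (positive = receding)."""
    return (z2_m - z1_m) / dt_s


if __name__ == "__main__":
    f_px, baseline = 1000.0, 0.10                # hypothetical camera parameters
    z = stereo_depth_m(f_px, baseline, 5.0)      # 20 m
    dz = depth_error_m(f_px, baseline, z, 0.5)   # half-pixel matching error
    frame_dt = 1 / 30                            # 30 frames per second
    # A per-frame depth error of dz shows up as a spurious velocity on the
    # order of dz / frame_dt once two frames are differenced.
    print(z, dz, dz / frame_dt)
```

With these assumed numbers, a half-pixel disparity error at 20 m already corresponds to about 2 m of depth uncertainty, or a spurious velocity on the order of 60 m/s at 30 frames per second. A direct velocity measurement avoids this differencing step.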

The Eyeonic Vision Chip

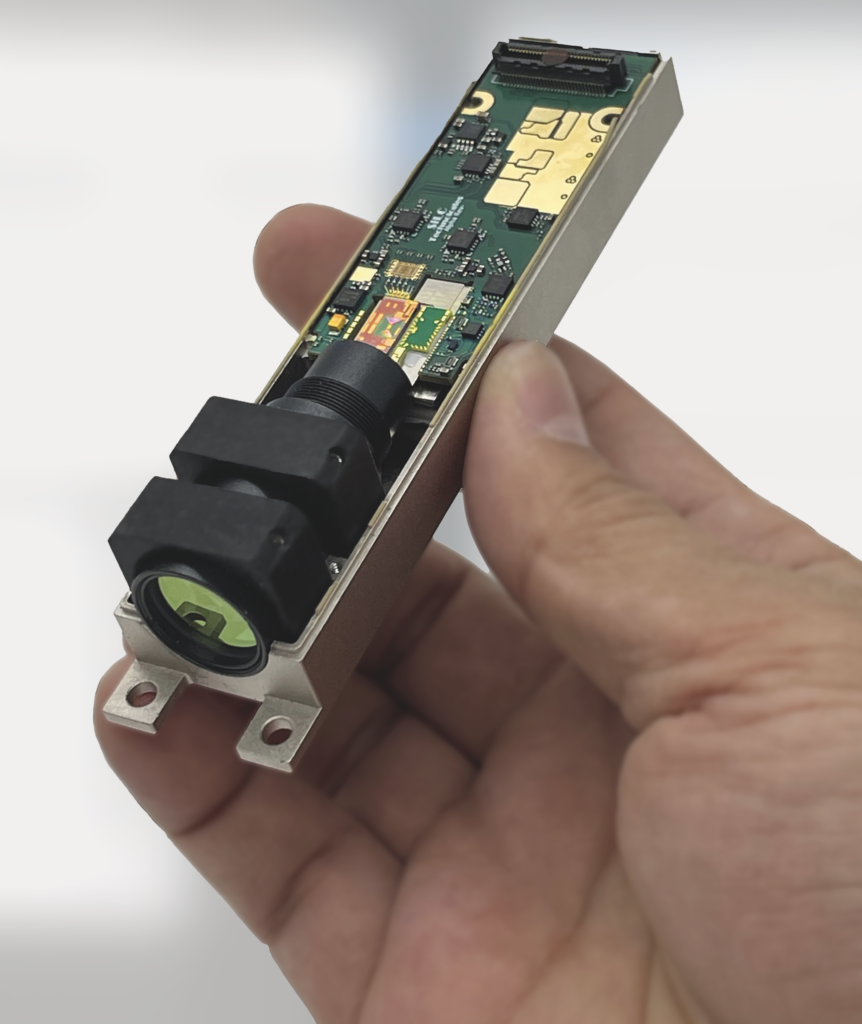

SiLC, which stands for “Silicon Light Chip,” is the only company that has demonstrated the ability to capture motion and depth directly with a fully integrated optical chip in silicon. SiLC’s recently announced Eyeonic Vision Sensor uses a unique silicon photonics technology that brings the cost and size of the solution down to the point where it can serve as a true companion to the CMOS imager found in every camera.

The best method for measuring velocity directly uses the Doppler shift in the frequency of sound or electromagnetic (radio or light) waves as they reflect from moving objects. Radar has successfully used this method to measure the velocity of large objects, such as airplanes and cars, but it suffers from very low image resolution and cannot accurately perceive stationary objects. Light can offer much higher resolution (close to human-level perception), but the required components and devices are complex and must meet very demanding performance specifications. This is beyond what today’s optical technology, as deployed in data communications or military applications, can deliver cost-effectively.

This is where SiLC’s Eyeonic Vision Sensor comes in. SiLC’s unique integration technology combines the complex optical functions needed for optical Doppler motion perception on a single silicon chip made with standard CMOS manufacturing processes. The Eyeonic Vision Sensor can also measure the shape and distance of objects with high precision at long range. The key is that SiLC can perform this complex integration and still achieve the very high level of performance required. SiLC’s patented technology and manufacturing process have been developed and optimized specifically for this purpose. As a result, its technology achieves 10 to 100 times better performance than other available offerings across a range of metrics that are key to this application.
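The Doppler relation behind this approach is simple: for a wave reflected from a target moving radially at speed v (with v much smaller than the speed of light c), the round-trip frequency shift is f_D = 2v/λ, where λ is the carrier wavelength. The sketch below compares the shift produced by the same target speed for two carriers. The 77 GHz radar band and the 1550 nm optical wavelength are assumptions chosen for comparison; the article does not state the wavelength SiLC uses.

```python
# Round-trip Doppler shift for radial motion, valid for v << c (illustrative sketch).

C = 299_792_458.0  # speed of light in vacuum, m/s


def doppler_shift_hz(radial_velocity_mps: float, wavelength_m: float) -> float:
    """Shift f_D = 2 v / lambda; positive velocity = target approaching."""
    return 2.0 * radial_velocity_mps / wavelength_m


def radial_velocity_mps(shift_hz: float, wavelength_m: float) -> float:
    """Invert the relation to recover radial velocity from a measured shift."""
    return shift_hz * wavelength_m / 2.0


# Assumed carriers for comparison (not taken from the article).
RADAR_77GHZ_M = C / 77e9      # about 3.89 mm
OPTICAL_1550NM_M = 1550e-9

if __name__ == "__main__":
    for name, wavelength in [("77 GHz radar", RADAR_77GHZ_M),
                             ("1550 nm light", OPTICAL_1550NM_M)]:
        print(f"{name}: {doppler_shift_hz(1.0, wavelength):,.0f} Hz per m/s")
```

A 1 m/s target shifts the assumed radar return by roughly 514 Hz but the assumed optical return by roughly 1.29 MHz, about 2,500 times more. Because velocity resolution scales roughly as λ/(2T) for an observation time T, the shorter optical wavelength allows fine velocity measurements within a short observation window.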

SiLC recently introduced a complete solution built around its Eyeonic Vision Sensor chip. The Eyeonic Vision System is the industry’s most powerful and compact frequency-modulated continuous-wave (FMCW) LiDAR solution. The system offers the highest resolution, highest precision, and longest range, and it is the only LiDAR solution that provides polarization information. The Eyeonic Vision System brings the highest levels of visual perception to applications that must perceive and identify objects at distances of more than 1 kilometer, while remaining eye-safe and immune to multi-user interference and background light.
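FMCW LiDAR measures range and velocity in the same measurement, which is how a single sensor can report both depth and motion. The sketch below uses the textbook triangular-chirp model, not SiLC’s actual design (which the article does not describe): the laser frequency sweeps up and then down by a bandwidth B over a ramp time T, and mixing the return with the outgoing light yields one beat frequency per ramp. The range term 2RB/(cT) is the same on both ramps, while the Doppler term 2v/λ subtracts on the up-ramp and adds on the down-ramp, so their average gives range and half their difference gives velocity. The `Chirp` parameters in the example are hypothetical.

```python
# Triangular-chirp FMCW model: range and radial velocity from two beat
# frequencies (illustrative sketch; parameters are hypothetical).
from dataclasses import dataclass

C = 299_792_458.0  # speed of light in vacuum, m/s


@dataclass(frozen=True)
class Chirp:
    bandwidth_hz: float  # optical frequency excursion per ramp, B
    ramp_time_s: float   # duration of one up- or down-ramp, T
    wavelength_m: float  # carrier wavelength, lambda

    @property
    def slope_hz_per_s(self) -> float:
        return self.bandwidth_hz / self.ramp_time_s


def beat_frequencies(chirp: Chirp, range_m: float,
                     velocity_mps: float) -> tuple[float, float]:
    """Forward model: (up-ramp, down-ramp) beat frequencies.

    velocity_mps > 0 means the target is approaching. Assumes the range term
    exceeds the Doppler term, so both beats are positive and unambiguous.
    """
    f_range = 2.0 * range_m * chirp.slope_hz_per_s / C
    f_doppler = 2.0 * velocity_mps / chirp.wavelength_m
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(chirp: Chirp, f_up: float,
                       f_down: float) -> tuple[float, float]:
    """Invert the model: the average isolates range, half the difference isolates Doppler."""
    f_range = (f_up + f_down) / 2.0
    f_doppler = (f_down - f_up) / 2.0
    range_m = C * f_range / (2.0 * chirp.slope_hz_per_s)
    velocity_mps = chirp.wavelength_m * f_doppler / 2.0
    return range_m, velocity_mps


if __name__ == "__main__":
    chirp = Chirp(bandwidth_hz=1e9, ramp_time_s=10e-6, wavelength_m=1550e-9)
    f_up, f_down = beat_frequencies(chirp, range_m=100.0, velocity_mps=5.0)
    print(f"beats: {f_up / 1e6:.2f} MHz up, {f_down / 1e6:.2f} MHz down")
    print(range_and_velocity(chirp, f_up, f_down))
```

In a real sensor the beat frequencies come from spectral analysis (for example, an FFT) of the detected signal, which this sketch omits. The same model also gives the familiar range resolution c/(2B), about 15 cm for the assumed 1 GHz sweep.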

Because the Eyeonic Vision Sensor also uniquely provides polarization intensity data, it can help with material detection and surface analysis. Its ability to do this in a small, cost-effective silicon chip, manufactured with wafer processes similar to those used for CMOS imaging chips, allows it to complement the functionality of existing cameras. It does so at a size and cost point that can reinvent the camera as we know it today, enabling next-generation cameras that function much like the human eye.

There are many use cases for the Eyeonic Vision System across a wide range of markets. Examples include self-driving cars, household and delivery robots, cameras that analyze motion to automatically generate statistics, gesture control, and applications in VR and AR. All of these applications and many more require the capture and analysis of motion and will have a major impact on our lives and future economic growth.